AI systems have moved far beyond assisting developers with suggestions. Today’s AI agents can write code, execute it, test applications, and deploy updates with little to no human involvement.

Platforms such as Copilot, Claude Code, and Codex are making software delivery faster than ever. But that speed comes with a growing risk that many teams overlook until something goes wrong.

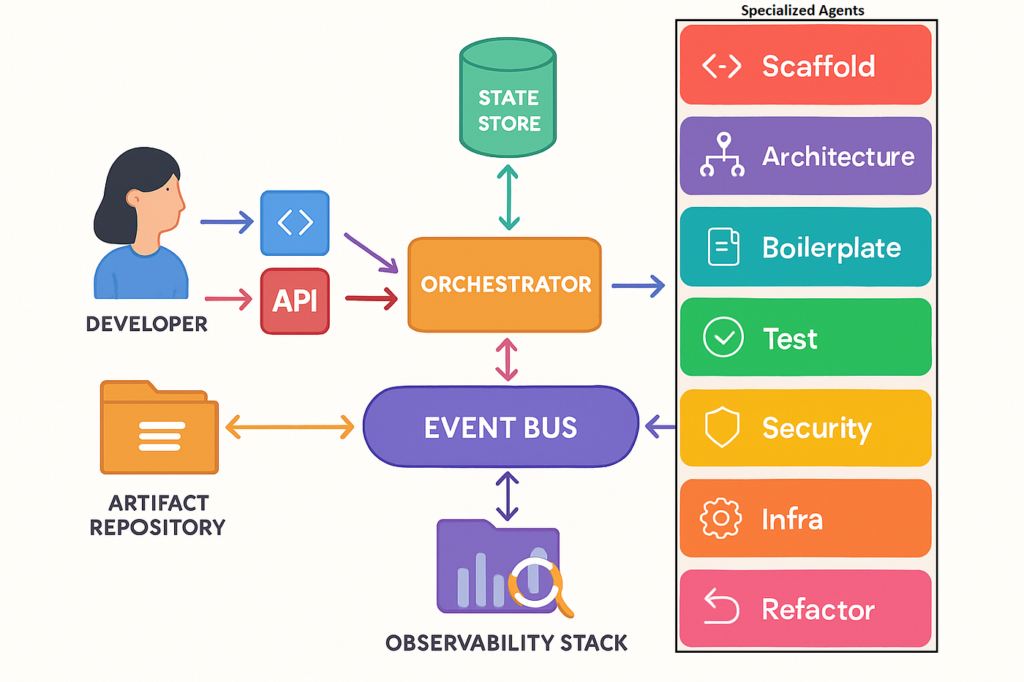

At the center of these automated workflows is a largely invisible layer built on the Model Context Protocol (MCP). MCP servers expose the tools, data sources, and services an AI agent can reach, so in practice they govern what the agent is allowed to do: which commands it can execute, which APIs it can call, and which systems or infrastructure it can interact with. When this control layer is weak, misconfigured, or compromised, the agent does not just fail quietly. It keeps operating with the full permissions and authority it has been granted.
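To make the idea of a control layer concrete, here is a minimal Python sketch of a deny-by-default gate that a wrapper around an agent's tools might apply before running a call. The `ToolPolicy` class, its `is_allowed` method, and the tool and host names are hypothetical illustrations, not part of the MCP specification or any MCP SDK.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class ToolPolicy:
    """Hypothetical deny-by-default policy for an agent's tool calls.

    Not part of the MCP specification: an illustrative gate that a server
    or client wrapper could check before executing a tool call.
    """

    allowed_tools: frozenset[str]
    allowed_hosts: frozenset[str] = field(default_factory=frozenset)

    def is_allowed(self, tool: str, host: str | None = None) -> bool:
        # Anything not explicitly listed is refused.
        if tool not in self.allowed_tools:
            return False
        # If the call targets a network host, that host must be listed too.
        if host is not None and host not in self.allowed_hosts:
            return False
        return True


policy = ToolPolicy(
    allowed_tools=frozenset({"read_file", "run_tests"}),
    allowed_hosts=frozenset({"api.internal.example"}),
)

print(policy.is_allowed("run_tests"))                  # True
print(policy.is_allowed("deploy"))                     # False: tool not listed
print(policy.is_allowed("read_file", "evil.example"))  # False: host not listed
```

Denying by default means a newly added tool or endpoint stays unreachable until someone deliberately approves it, which keeps any expansion of the agent's permissions visible and reviewable.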

A real-world example surfaced with CVE-2025-6514, a vulnerability in mcp-remote, a widely used OAuth proxy that connects MCP clients to remote MCP servers. The flaw opened a path to remote code execution on developers' machines, and the package had been downloaded hundreds of thousands of times. There was no complex exploit chain and no obvious breach signal. Automation simply performed actions it had already been authorized to carry out, but at massive scale.

The incident highlighted a critical reality: an AI agent that is allowed to execute commands can also execute harmful ones when controls fail. The risk is not theoretical. It is built directly into modern development pipelines.

As organizations adopt agentic AI, security teams are inheriting new responsibilities they did not design for. MCP servers determine agent behavior in production environments, yet they are rarely audited with the same rigor as traditional access systems. API keys accumulate quietly, permissions expand over time, and legacy identity models struggle to keep up when software acts independently on behalf of humans.
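The quiet accumulation of keys and permissions described above can be checked for mechanically. The sketch below assumes a hypothetical inventory of agent credentials with their scopes and last-used timestamps (in practice that data would come from a secrets manager or identity provider) and flags keys that have sat idle too long as well as scopes that exceed an approved baseline. The `AgentCredential` record, the `audit` function, the scope names, and the 30-day threshold are illustrative choices, not a standard.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class AgentCredential:
    # Hypothetical record; a real inventory would come from a secrets
    # manager or identity provider.
    name: str
    scopes: set[str]
    last_used: datetime


def audit(creds, baseline_scopes, max_idle=timedelta(days=30), now=None):
    """Return findings for idle credentials and scopes beyond the baseline."""
    now = now or datetime.now(timezone.utc)
    findings = []
    for cred in creds:
        idle = now - cred.last_used
        if idle > max_idle:
            findings.append(f"{cred.name}: unused for {idle.days} days")
        extra = cred.scopes - baseline_scopes
        if extra:
            findings.append(f"{cred.name}: scopes beyond baseline {sorted(extra)}")
    return findings


now = datetime(2025, 7, 1, tzinfo=timezone.utc)
creds = [
    AgentCredential("ci-agent", {"repo:read", "repo:write"}, now - timedelta(days=2)),
    AgentCredential("old-deploy-bot", {"repo:read", "deploy:prod"}, now - timedelta(days=90)),
]
findings = audit(creds, baseline_scopes={"repo:read", "repo:write"}, now=now)
for finding in findings:
    print(finding)
# old-deploy-bot: unused for 90 days
# old-deploy-bot: scopes beyond baseline ['deploy:prod']
```

Running a check like this on a schedule turns permission drift from something discovered after an incident into a routine finding.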

Security challenges tied to agentic AI often include hidden API credentials, unclear execution boundaries, and limited visibility into what agents actually do once deployed. Without proper oversight, automation becomes an attack surface rather than an efficiency gain.
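One way to narrow the visibility gap is to record every tool call an agent makes. The following minimal sketch wraps a tool function so that its name, arguments, and outcome are appended to a log as JSON, with credential-like arguments redacted so the log does not itself leak secrets. The `audited` and `redact` helpers and the `SENSITIVE_KEYS` naming convention are hypothetical; a production setup would send these records to a tamper-resistant store rather than an in-memory list.

```python
from __future__ import annotations

import json
import time
from typing import Any, Callable

# Assumption: credentials are passed under these argument names.
SENSITIVE_KEYS = {"api_key", "token", "password", "secret"}


def redact(args: dict[str, Any]) -> dict[str, Any]:
    # Hide values whose key names suggest a credential so logs don't leak them.
    return {k: ("[REDACTED]" if k.lower() in SENSITIVE_KEYS else v) for k, v in args.items()}


def audited(tool_name: str, fn: Callable[..., Any], log: list[str]) -> Callable[..., Any]:
    """Wrap a tool (called with keyword arguments) so every call is recorded."""
    def wrapper(**kwargs: Any) -> Any:
        entry = {"ts": time.time(), "tool": tool_name, "args": redact(kwargs)}
        try:
            result = fn(**kwargs)
            entry["status"] = "ok"
            return result
        except Exception as exc:
            entry["status"] = f"error: {exc}"
            raise
        finally:
            # Appending in `finally` means failed calls are logged too.
            log.append(json.dumps(entry))
    return wrapper


audit_log: list[str] = []
fetch = audited("fetch_issue", lambda issue_id, token: f"issue {issue_id}", audit_log)
fetch(issue_id=42, token="sk-live-123")
print(json.loads(audit_log[0])["args"])  # {'issue_id': 42, 'token': '[REDACTED]'}
```

Because the wrapper sits between the agent and the tool, it records what was actually executed rather than what the agent was expected to do.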

Agentic AI is already operating inside many development environments. The real question is whether organizations can clearly see its actions, understand its permissions, and stop it before automation crosses into abuse.