A serious security vulnerability has been identified in ServiceNow that affects its AI and virtual agent components, raising concerns about how agentic AI systems can be abused inside enterprise environments.

The issue impacts the following ServiceNow components and versions:

- Now Assist AI Agents (sn_aia): versions 5.1.18 and later, and 5.2.19 and later

- Virtual Agent API (sn_va_as_service): versions 3.15.2 and later, and 4.0.4 and later

The vulnerability, tracked as 2025-12420, was discovered and responsibly disclosed in October 2025 by Aaron Costello, Chief of SaaS Security Research at AppOmni. At the time of disclosure, there was no confirmed evidence of active exploitation, but organizations are strongly advised to apply the relevant security updates to reduce risk.

According to Costello, the flaw represents one of the most severe AI-related security issues uncovered so far. If exploited, attackers could effectively take control of an organization’s AI agents and turn them into tools for malicious activity, undermining the very automation systems designed to improve efficiency.

AppOmni’s analysis revealed that a weakness in the ServiceNow Virtual Agent integration could allow unauthenticated attackers to impersonate any ServiceNow user using only an email address. This flaw enables attackers to bypass protections such as multi-factor authentication (MFA) and single sign-on (SSO).

In a worst-case scenario, a threat actor could impersonate an administrator, trigger AI agents with elevated privileges, weaken security controls, and create hidden backdoor accounts. This level of access would give attackers the ability to persist within the environment with minimal detection.

The vulnerability stems from a combination of insecure account-linking logic and the use of a hardcoded, platform-wide secret. Together, these weaknesses allow attackers to sidestep identity safeguards and remotely execute privileged AI-driven workflows as any user within the system.

This disclosure follows an earlier AppOmni report published nearly two months prior, which highlighted how default configurations in ServiceNow’s Now Assist generative AI platform could be abused. In that case, attackers could leverage agentic AI features to perform second-order prompt injection attacks.

If chained together, these weaknesses could enable unauthorized actions such as copying and exfiltrating sensitive enterprise data, altering records, and escalating privileges across the platform.

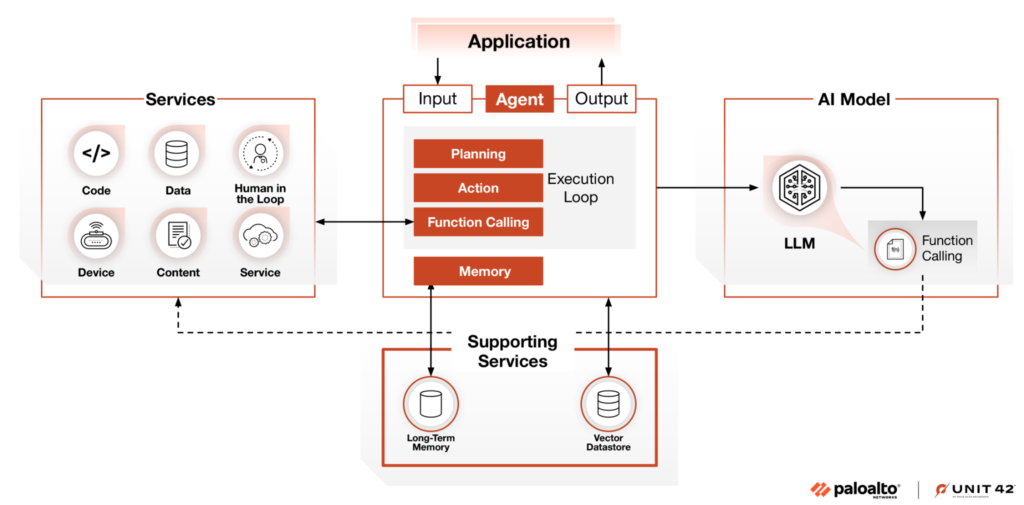

The findings highlight a growing challenge for organizations adopting agentic AI: while automation increases speed and productivity, insufficient controls around AI execution and identity enforcement can introduce powerful new attack paths if left unprotected.